#5 - Square's Auth Outage

Lessons on Redis, Authentication, and Retries from a Square Post Mortem

Hey friends,

On Monday, I mentioned I’d send a bonus issue this week - and so, we’re back at it with post number two ✌️.

🧪 This post is an experiment, we’re diving into a public post-mortem and making sense of what happened. Unfortunately, information available to the public is usually pretty limited, but we’ll do our best to play detective - and I think there’s still a LOT we can learn.

Alright! Let’s dig in to Square’s Auth Outage on March 16, 2017

10:02 AM - March 16, 2017

Square is down. Nobody can make payments. Merchants are getting logged out and can’t log back in. Business at coffee shops and restaurants across the country grinds to a halt. This is bad.

Cashiers look at their Square devices with utter bewilderment. “Did I just do something wrong?” The answer is no, they didn’t - there’s been a system failure in Square’s Authentication system. But no one knows that yet.

Meanwhile at Square HQ, it’s the quiet before the storm.

4 minutes later…

The first warning emerges at Square. The on-call engineer for “Roster”, the internal service responsible for customer identity, gets paged.

There was a deploy to Roster just a few minutes before…. shit.

2 minutes later…

Roster’s on-call engineer has barely had a chance to examine the git log when an alert hits inboxes across the company: Square’s API is down. This is a bonafide company-wide outage.

At about the same time, engineers from across the company mention that they’re experiencing failures when calling “Multipass” - the internal service responsible for customer authentication.

And thus, the engineers of Roster (customer identity) and Multipass (customer authentication) are now in the hot seat.

The Response

In an outage, engineers are taught (either by a mentor or by the unforgiving lessons of experience) to mitigate. As Google puts it: “Stop the bleeding, restore service, and preserve the evidence for root-causing.”

That “stop the bleeding” step usually starts with the most basic tools of the trade:

Rollbacks (deploying a stable version of your code/configurations)

Restarts

In this case, Square engineers tried both. They rolled back Roster to a stable version, but nothing happened. Then they restarted Multipass - and still nothing.

Sadly, the simple fixes didn’t work. In order to stop the bleeding, the engineers had to dig in, and this is what they (eventually) found:

The Real Issue

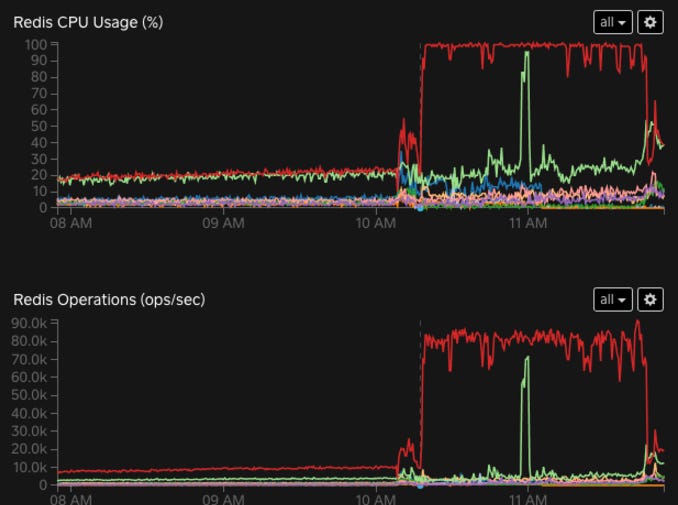

Multipass relies on a Redis cluster for cacheing. The post mortem doesn’t say how Multipass uses Redis, simply that it did, and that something went haywire. Notice the massive spike in Redis Operations below 👇

The engineers went to Redis, used the commands SLOWLOG (to find slow queries) and MONITOR (to get a stream of queries), and identified the queries that were hammering the cache. They took those queries, and used them to track down the code that produced them. Bingo. Bad error retry logic.

Requests over the network can fail. Engineers learn this extremely early on in their careers. In school, in bootcamps, in your personal projects - you may not ever need to do proper error handling over the network. But in production, you’ll get smacked upside the head if you don’t have some retry strategy - it’s critical.

The most basic retry strategy is to retry immediately. But this is suboptimal, because the system you’re sending requests to could be overwhelmed. And if you continue to participate in the overwhelming of the system with your incessant requests - well, you’ve essentially put yourself in an infinite retry loop.

Unfortunately, when the Square engineers looked at their retry code, they found an immediate retry strategy, with a max limit of 500 retries. Their own code was hammering Redis.

A Quick Note on Authentication

I’m about to dive into some conjectures about Multipass, the customer authentication service, and why its Redis cluster was mission critical to EVERYTHING AT SQUARE.

But before I do that, let’s define Authentication and compare it to its oft confused sibling, Authorization.

Authentication - often referred to as AuthN, is all about proving your identity. It’s like that Lupe Fiasco song: 🎤🎶 “If you are, what you say you are - a superstar, then have no fear, type your password here”. An authentication system cares about you proving your identity, and once the system is happy, you now have access.

Authorization - often referred to as AuthZ, is all about determining your access level. Ok, we get it, you’re legit. But are you a Superstar or a Scrub? Superstars have access to some crazy resources that Scrubs don’t, but they’re both allowed in. We’ll have to check our directory to see what level you are.

Ok, now that we’ve cleared that up, let’s talk about AuthN.

Authentication is typically implemented via a two step process.

Prove it (aka Login) - This is usually done by username/password, but there are other schemes. Sometimes, multiple forms of authentication are required (known as MFA)

Session Token - once you’ve proven you’re authentic, then you’ll be given a session token, which is good for a certain amount of time and allows you to access the service - so long as you have it. It’s how you stay signed in for long periods of time

For this outage, we mostly care about that session token. When we hand out session tokens, we typically have to keep records of those tokens and when they expire. That way, when a user makes a request with their session token, we can do a lookup of tokens from our records, and see if this token is currently valid.

Invalid token? We deny the request.

Valid token? We let the user in.

And thus, the key thing to note here is that we need to store a record of the valid tokens somewhere. And a classic place to store them? A cache… like Redis.

Why Square was (probably) down

When Multipass’s Redis instance hit a CPU utilization of 100%, it got overwhelmed. And most likely, Multipass was relying on Redis to store session tokens. When services across Square were calling Multipass, it probably went something like this:

Other service to Multipass: “Hey, is this user authenticated? This is their token”

Multipass: “Ok, one sec”

Multipass to Redis: “Hey, can you check if this session token is still valid?”

Redis: “Umm, you’ve asked me a bajillion questions already, so that’s gonna take 10 years before I can get to it”

Multipass: “Fine”

Other service to Multipass: “Yo…. it’s been over a minute. I’m gonna end this call and maybe try again later”

Authentication is cross cutting - every user initiated action needs it. So Multipass’s Redis instance going down is a very bad thing. Because Multipass sits in the critical path, it means all requests were failing.

The Fix

While we can’t be certain of what the fix was, there’s a very solid chance it involved one of the following:

Reducing retries - simply going from 500 to 1 would likely shaved off a couple orders of magnitude of requests to Redis.

A smarter retry strategy

a regular interval retry (5 seconds)

an exponential backoff (2s, then 4s, then 8s, etc)

a linearly increasing interval (2s, 4s, 6s, etc.)

random intervals

And once this change was deployed (I’m guessing it was a one line change to reduce retries from 500 to something small), Redis healed. And as the post-mortem says, “Payments began to flow normally!”

1 hour 53 minutes after the outage started, service was restored.

The staff at coffee shops, frustrated beyond belief, could finally operate once again.

Lessons

Here are a few takeaways from this post-mortem:

How to use SLOWLOG and MONITOR to debug Redis

The need to choose a good retry strategy (and a cautionary tale about immediate retry with a high max count)

How stateful authentication sits in the critical path and a failure can bring a business to a halt. (And if you were wondering - yes! There is a stateless way of doing it. See JSON Web Tokens - or JWTs!)

The primary responsibility of engineers during an outage: “Stop the bleeding, restore service, and preserve evidence for root-causing”

But there’s one thing that feels rather unsatisfying about this incident. We never really got a sense for the true root cause. In the post-mortem Square simply says: “Determine if the single datacenter Roster outage exacerbated Multipass-Redis retries”.

We don’t really know why Multipass requests to Redis started failing. Did Roster cause this? Or was the Roster issue just a red herring? The post-mortem mentioned Multipass submitted queries to Redis in a transaction using optimistic locking. Was there a deadlock, or just a lock that was held for a very long time?

Sadly, we won’t know. And unfortunately, that’s often par for the course. Sometimes there’s just not enough evidence to find the rascal that caused the whole mess. Another one that got away. 🕵️♂️

Conclusion

Well, that’s it! I hope you enjoyed this post-mortem deep dive, and maybe you learned a thing or two.

Writing good software is hard, but we can learn a lot from when things go wrong. I appreciate how Square made this post-mortem public. I learned a couple things from reading it (namely, the Redis operations) and thank them for sharing this contribution.

If you want to see more of these post-mortem deep dives, let me know - by either giving this post a ❤️ or leaving a comment!

Till next time,

Phil

very cool. it's interesting to see a concrete example of auth flow for a multi-client service and how it can go awry. I've enjoyed reading your articles so far, so keep up the good work.